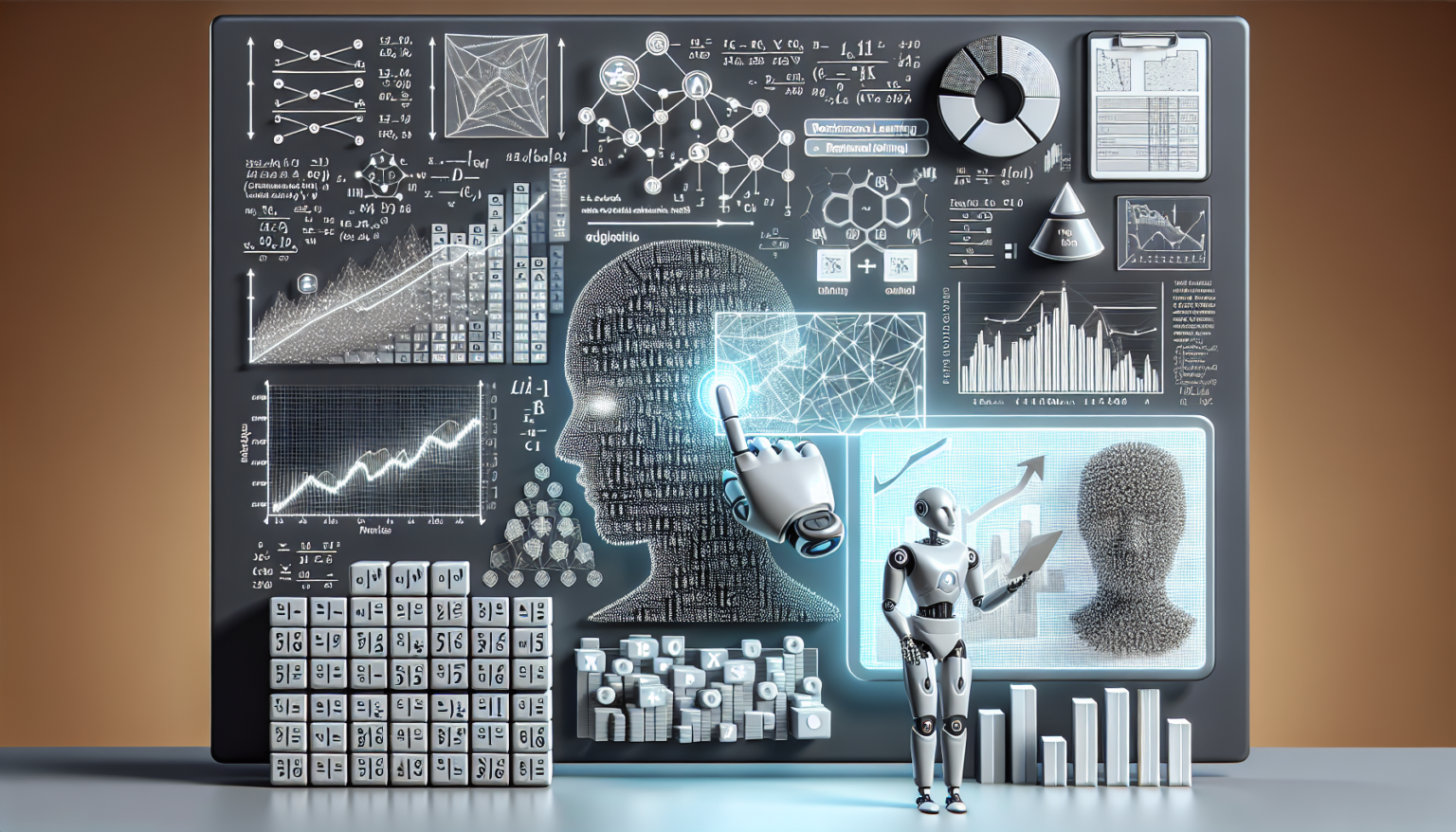

Understanding Reinforcement Learning in Automated Trading

What is Reinforcement Learning?

Reinforcement Learning (RL) is a branch of machine learning in which an agent learns to make decisions through trial and error. The agent acts, receives rewards or penalties based on its actions, and gradually adjusts its strategy to earn more reward over time. Many RL methods used in trading are model-free, meaning the agent learns directly from experience without first building an explicit model of how the market behaves.

Why Use Reinforcement Learning for Trading?

Financial markets are complex and dynamic, characterized by uncertainty and rapid changes. Rule-based algorithms with fixed parameters often struggle to adapt quickly to these fluctuations. This is where RL comes in. Because an RL agent can learn from a wide range of market conditions, it lets traders develop strategies that adapt over time, potentially leading to improved trading performance.

Features of Reinforcement Learning that Benefit Trading

1. **Continuous Learning:** RL can keep learning from new data, allowing automated trading systems to adapt to changing market conditions with less manual re-tuning.

2. **Exploration vs. Exploitation:** An RL agent must balance exploration (trying new strategies) against exploitation (using strategies already known to work). Handling this trade-off well matters in volatile markets, where a strategy that worked yesterday may stop working today.

3. **Long-Term Reward Thinking:** Unlike methods that focus only on the next trade's profit, RL optimizes cumulative reward over many steps, typically weighting future rewards by a discount factor. This lets the agent accept a small short-term cost when it leads to a larger payoff later.
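To make the long-term reward idea concrete, here is a minimal sketch of a discounted return. The function name, the reward values, and the choice of `gamma` are illustrative assumptions, not values from any real strategy.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where a reward k steps ahead is weighted by gamma**k."""
    total = 0.0
    # Work backwards so each step needs one multiply and one add.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Made-up reward sequence: a small loss now, a larger gain two steps later.
rewards = [-1.0, 0.0, 3.0]

short_sighted = discounted_return(rewards, gamma=0.0)  # sees only the first reward
far_sighted = discounted_return(rewards, gamma=0.9)    # also credits the later gain
print(short_sighted, far_sighted)
```

With `gamma=0.0` the agent values only the immediate loss, while a `gamma` close to 1 lets the later gain outweigh it.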

Implementing Reinforcement Learning in Trading Strategies

The Key Components of an RL System

To harness RL for trading, you need to understand its fundamental components:

1. **Agent:** In trading, the agent is the model you create, which learns to make trading decisions based on the environment.

2. **Environment:** The trading market acts as the environment in which the agent operates. The agent receives market data from it, makes decisions, and observes the results.

3. **Actions:** These are the choices available to the agent: buy, sell, hold, or any other trading actions that may be part of the strategy.

4. **Rewards:** The reward system is vital; it quantifies the results of the agent’s actions. A positive reward could be a profit, while a negative reward may come from a loss.
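The four components above can be sketched as a single interaction loop. This is a toy illustration under stated assumptions: the prices are made up, the agent picks actions at random instead of learning, and `random_agent` and `step` are hypothetical names.

```python
import random

prices = [100.0, 101.0, 99.5, 102.0, 103.0]  # made-up prices, illustration only
ACTIONS = ("buy", "sell", "hold")


def random_agent(observation):
    """Agent: a placeholder that ignores the observation and acts at random."""
    return random.choice(ACTIONS)


def step(position, action, price_now, price_next):
    """Environment: apply the action, then pay out the next price change."""
    if action == "buy":
        position = 1   # hold one unit
    elif action == "sell":
        position = 0   # go flat
    # Reward: profit or loss on the held position over the next step.
    reward = position * (price_next - price_now)
    return position, reward


position, total_reward = 0, 0.0
for t in range(len(prices) - 1):
    action = random_agent(prices[t])
    position, reward = step(position, action, prices[t], prices[t + 1])
    total_reward += reward
print(total_reward)
```

A learning agent would replace `random_agent` with a policy that is updated from the rewards it observes.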

Steps to Build an RL Model for Trading

Step 1: Define the Trading Environment

It’s crucial to create a realistic simulation of the market. This involves determining what data will be used—like stock prices, volume, or indicators—and how the agent will interact with this data. Historical data can be particularly useful for training.
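As one possible starting point, the sketch below defines a small single-asset environment. The class name `ToyTradingEnv`, the `reset`/`step` interface, the window of past returns as the observation, and the flat proportional cost are all assumptions made for illustration. The interface is only loosely modeled on the common reset/step pattern and is not the API of any particular library.

```python
class ToyTradingEnv:
    """Hypothetical single-asset environment with a reset/step interface."""

    def __init__(self, prices, window=3, cost=0.001):
        if len(prices) < window + 2:
            raise ValueError("need at least window + 2 prices")
        self.prices = list(prices)
        self.window = window
        self.cost = cost  # proportional cost charged when the position changes

    def _observation(self):
        # The last `window` one-step returns up to the current time index.
        p = self.prices
        first = self.t - self.window + 1
        return tuple(p[i] / p[i - 1] - 1.0 for i in range(first, self.t + 1))

    def reset(self):
        self.t = self.window  # first index with a full window of returns
        self.position = 0     # 0 = flat, 1 = long
        return self._observation()

    def step(self, action):
        # action: 0 = keep position, 1 = go long, 2 = go flat
        new_position = {0: self.position, 1: 1, 2: 0}[action]
        traded = new_position != self.position
        self.position = new_position
        # Reward: return earned over the next bar, minus any trading cost.
        next_return = self.prices[self.t + 1] / self.prices[self.t] - 1.0
        reward = self.position * next_return - (self.cost if traded else 0.0)
        self.t += 1
        done = self.t >= len(self.prices) - 1
        return self._observation(), reward, done
```

Charging a cost on every position change is a simple way to keep the simulation from rewarding unrealistic, constant trading.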

Step 2: Choose the Right Algorithm

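Several RL algorithms can be employed, such as Q-learning, Deep Q-Networks (DQN), or Proximal Policy Optimization (PPO). Tabular Q-learning suits small, discrete state spaces. DQN replaces the table with a neural network so it can handle larger state spaces. PPO is a policy-gradient method that also supports continuous actions, such as position sizes. The right choice depends on the complexity of the trading strategy and the computational resources available.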
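For reference, the core of tabular Q-learning is a single update rule. The sketch below shows that rule on its own; the state labels, the action indices, and the `alpha` and `gamma` values are placeholder assumptions.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, n_actions,
                      alpha=0.1, gamma=0.99):
    """Move Q(state, action) a fraction alpha toward the one-step TD target."""
    # Value of the best action available in the next state.
    best_next = max(q[(next_state, a)] for a in range(n_actions))
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
q_learning_update(q, state="up", action=1, reward=1.0,
                  next_state="down", n_actions=3)
print(q[("up", 1)])
```

DQN uses the same target but fits a neural network to it instead of updating a table entry.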

Step 3: Train the Model

Training the model involves running multiple simulations where the agent learns from its experiences. Here, you’ll want to ensure that the agent has enough diverse situations to learn effectively, balancing between exploration and exploitation.
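A minimal training loop might look like the following sketch. It assumes a deliberately crude setup: the state is just whether the last price move was up, the two actions are flat or long, and exploration decays linearly over episodes. The function name `train` and every parameter value are illustrative.

```python
import random
from collections import defaultdict


def train(prices, episodes=200, alpha=0.1, gamma=0.95,
          eps_start=1.0, eps_end=0.05, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # (state, action) -> estimated value
    actions = (0, 1)        # 0 = stay flat, 1 = hold one unit long
    # State: whether the previous price move was up (1) or not (0).
    states = [int(prices[t] > prices[t - 1]) for t in range(1, len(prices))]
    for episode in range(episodes):
        # Linearly decay exploration from eps_start to eps_end.
        frac = episode / max(1, episodes - 1)
        eps = eps_start + frac * (eps_end - eps_start)
        for i in range(len(states) - 1):
            s, s_next = states[i], states[i + 1]
            if rng.random() < eps:
                a = rng.choice(actions)                    # explore
            else:
                a = max(actions, key=lambda x: q[(s, x)])  # exploit
            # Reward: the next price change if long, zero if flat.
            r = a * (prices[i + 2] - prices[i + 1])
            best_next = max(q[(s_next, x)] for x in actions)
            q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
    return q


# Synthetic, steadily rising prices: being long should end up valued higher.
q = train([100.0 + t for t in range(50)])
print(q[(1, 1)] > q[(1, 0)])
```

Starting with high exploration and reducing it over time lets the agent sample many situations early and rely on what it has learned later.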

Step 4: Backtesting

Once trained, the model should undergo backtesting, where it is evaluated on historical data that was not used during training. Testing on the training period itself would overstate performance. This is a critical step to check that the model is robust before deploying it into live trading scenarios.
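A bare-bones backtest can be sketched as follows. It assumes a chronological train/test split, a long-or-flat position, and a proportional cost per trade. `momentum_policy` is a hypothetical stand-in for a trained agent, and all names and numbers are illustrative.

```python
def chronological_split(prices, train_fraction=0.7):
    """Split by time, never at random, so the test set lies after training."""
    cut = int(len(prices) * train_fraction)
    return prices[:cut], prices[cut:]


def backtest(prices, policy, cost=0.001):
    """Replay `policy` over `prices`; return the equity curve starting at 1.0."""
    equity, position, curve = 1.0, 0, [1.0]
    for t in range(1, len(prices) - 1):
        # The policy only sees prices up to and including time t (no look-ahead).
        target = policy(prices[: t + 1])
        if target != position:
            equity *= 1.0 - cost  # pay the cost when the position changes
            position = target
        equity *= 1.0 + position * (prices[t + 1] / prices[t] - 1.0)
        curve.append(equity)
    return curve


def momentum_policy(history):
    """Stand-in for a trained agent: go long after an up move, else stay flat."""
    return 1 if history[-1] > history[-2] else 0


prices = [100.0, 101.0, 100.5, 102.0, 103.5, 103.0, 104.0, 106.0, 105.0, 107.0]
train_prices, test_prices = chronological_split(prices)
print(backtest(test_prices, momentum_policy))
```

Passing only `prices[: t + 1]` to the policy is the detail that guards against look-ahead bias, a common source of overly optimistic backtests.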

Step 5: Live Trading

After rigorous testing, the agent can be deployed in a live environment. However, it is essential to monitor its performance closely so that necessary adjustments can be made quickly.

Challenges of Using Reinforcement Learning in Trading

Data Quality and Quantity

The effectiveness of an RL model often hinges on the quality and quantity of data used for training. Incomplete or noisy data can lead to poor model performance.

Overfitting

One significant risk is overfitting, where the model performs well on historical data but poorly on unseen data. This happens when it learns noise rather than actual patterns. Balancing complexity and generalization is key in avoiding this pitfall.
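One common way to check for overfitting is walk-forward evaluation: train on one window, test on the period right after it, then slide both forward. The sketch below only generates the index ranges. The function name and the window sizes are assumptions.

```python
def walk_forward_splits(n_samples, train_size, test_size):
    """Yield (train_indices, test_indices) pairs that move forward in time."""
    start = 0
    while start + train_size + test_size <= n_samples:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += test_size  # slide forward by one test window


for train, test in walk_forward_splits(n_samples=10, train_size=4, test_size=2):
    print(list(train), list(test))
```

A model whose results are strong on each training window but consistently weak on the following test window is likely fitting noise.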

Computational Requirements

Training RL models can be computationally intensive. Depending on the complexity of the strategy, the resources available may significantly affect the training speed and efficiency.

Market Changes

Financial markets are not static; they evolve. A model trained on past data may not perform well in a new market environment, necessitating constant retraining and adaptation.

Monitoring and Maintaining Your RL Trading System

The Importance of Continuous Learning

A successful RL trading system does not remain stagnant. It should continuously learn from new data, adjusting its models as market conditions shift. Implementing online learning algorithms can help in this aspect, where the agent updates its strategy based on real-time data.
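The basic mechanism behind many online updates is an incremental estimate with a constant step size, which weights recent data more heavily than old data. The sketch below shows that idea on a made-up reward stream with a regime change. The function name and step size are illustrative.

```python
def online_update(estimate, new_value, step_size=0.1):
    """Move the running estimate a fixed fraction toward the newest value."""
    return estimate + step_size * (new_value - estimate)


# Made-up reward stream: a regime paying +1, then a regime paying -1.
stream = [1.0] * 50 + [-1.0] * 50

estimate = 0.0
for value in stream:
    estimate = online_update(estimate, value)
print(estimate)  # ends close to -1.0, the most recent regime
```

A larger step size adapts faster to regime changes but makes the estimate noisier, so it is usually tuned rather than fixed in advance.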

Performance Metrics

Monitoring performance is crucial to understanding the effectiveness of your RL model. Common metrics include:

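- **Annualized Return:** The return the strategy earned, scaled to a one-year rate so that periods of different length can be compared.

- **Sharpe Ratio:** The average return in excess of the risk-free rate divided by the standard deviation of returns. It shows whether the returns are worth the volatility.

- **Maximum Drawdown:** The largest peak-to-trough decline in account value. Knowing how much capital could have been lost at the worst point is essential for risk management.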
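The three metrics above can be computed as in this sketch. It assumes an equity curve sampled at regular intervals, 252 trading periods per year, and a per-period risk-free rate of zero by default. The function names are illustrative.

```python
import math


def annualized_return(equity, periods_per_year=252):
    """Compound growth rate of the equity curve, scaled to one year."""
    n_periods = len(equity) - 1
    return (equity[-1] / equity[0]) ** (periods_per_year / n_periods) - 1.0


def sharpe_ratio(returns, periods_per_year=252, risk_free_per_period=0.0):
    """Mean excess return over its sample standard deviation, annualized."""
    excess = [r - risk_free_per_period for r in returns]
    mean = sum(excess) / len(excess)
    variance = sum((x - mean) ** 2 for x in excess) / (len(excess) - 1)
    return mean / math.sqrt(variance) * math.sqrt(periods_per_year)


def max_drawdown(equity):
    """Largest fractional drop from a running peak to a later value."""
    peak, worst = equity[0], 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst


equity = [1.00, 1.02, 1.01, 1.05, 0.98, 1.03]  # made-up equity curve
returns = [b / a - 1.0 for a, b in zip(equity, equity[1:])]
print(annualized_return(equity), sharpe_ratio(returns), max_drawdown(equity))
```

Note that `sharpe_ratio` needs at least two returns with some variation, since it divides by the sample standard deviation.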

Regular Reviews

Routine analysis of the strategies and market conditions is fundamental. This can unearth insights about when to retrain or re-evaluate the model and ensure it remains aligned with overall trading goals.

Future of Reinforcement Learning in Trading

Technological Advancements

As technology progresses, so too will the methods and techniques used in RL trading. More computing power, better data analytics, and refined algorithms should make it practical to train larger models on more data.

Integration with Other Strategies

Combining RL with traditional trading strategies, such as technical analysis or fundamental analysis, may create hybrid models that draw on the strengths of both approaches.

Broader Market Applications

Outside of equities, RL can find its place in other financial instruments like forex, options, and cryptocurrencies. The adaptability of RL extends its applicability across various market conditions and instruments.

In summary, reinforcement learning offers a flexible framework for automated trading, provided the environment is realistic, the model is tested on unseen data, and the system is monitored and retrained as markets change. With the right approach and ongoing adjustments, traders can potentially improve their trading performance.